Decoding ChatGPT: How 5 Breakthroughs Led to ChatGPT

Intro

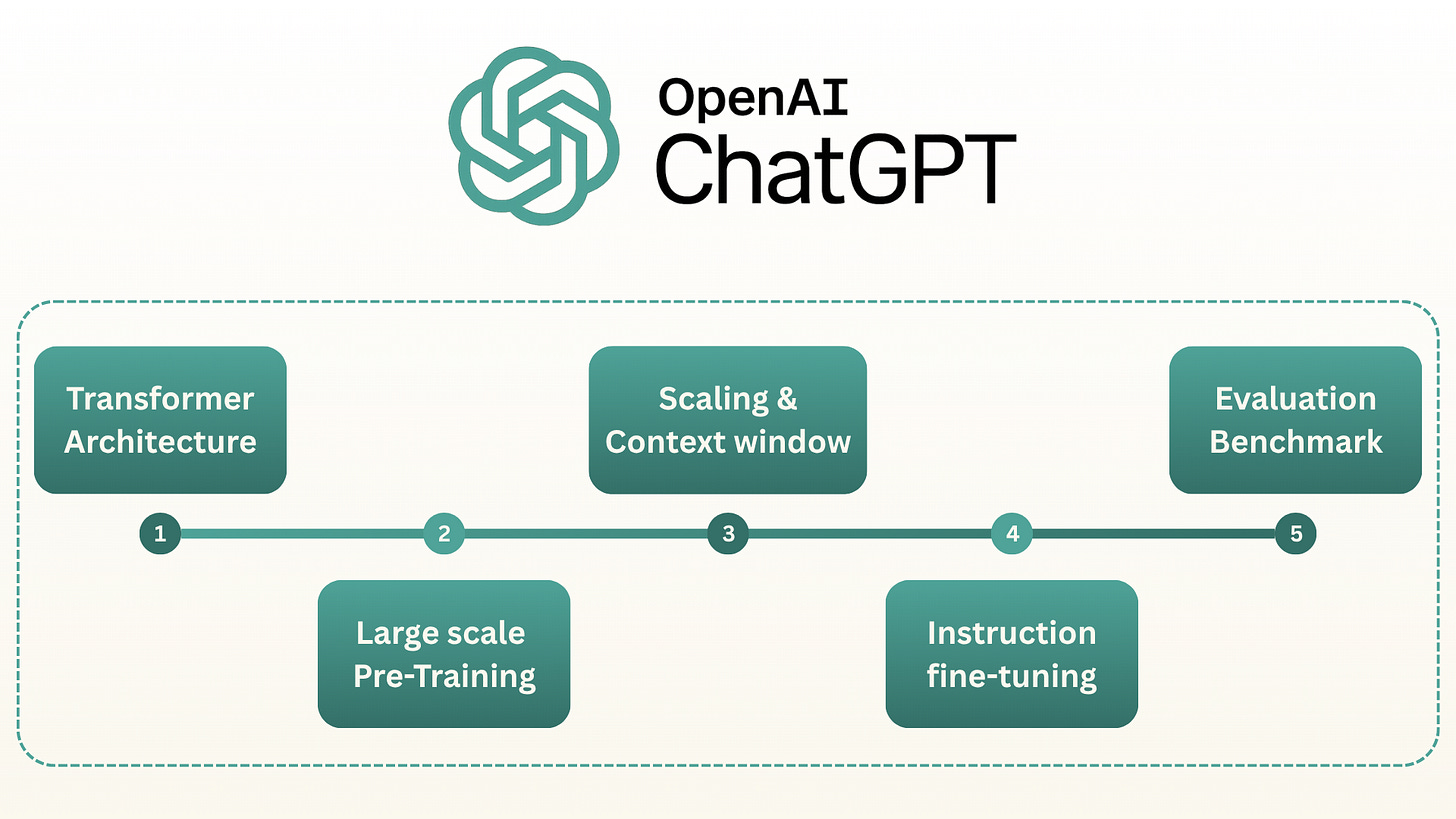

Following our exploration of the six core deep learning architectures that have shaped artificial intelligence, we now turn our attention to the major research breakthroughs that laid the groundwork for today’s large language models and the latest ChatGPT models. This article covers the timeline of research from the introduction of the Transformer architecture in 2017 up to the public release of ChatGPT (GPT-3.5 series) in late 2022. In our subsequent article, we will build upon this foundation, focusing on the advancements from GPT-3.5 to the present.

The release of ChatGPT in late 2022 was a pivotal event. It put advanced AI capabilities directly in the hands of millions of people and changed public perceptions of artificial intelligence. This exposure sparked a wave of investment, opened new research frontiers, and began to shift the global understanding of what counts as repetitive or routine work that could be automated. It also marked a shift in human-computer interaction, demonstrating the power of natural language interfaces and hinting at a future where English, or other human languages, could become a new form of programming language, enabling broader interaction with complex systems.

In this article, we will cover five key advancements that led to the success of ChatGPT.

1. Transformer Architecture (2017)

Brief Introduction

The Transformer architecture was introduced in 2017 by Google researchers in the paper, Attention Is All You Need. This architecture departed from the traditional recurrent and convolutional approaches used for sequence modeling (e.g., LSTM and CNN-based models) and instead leveraged attention mechanisms to process input sequences in parallel. By doing so, it effectively removed many of the bottlenecks associated with recurrent approaches and quickly became the basis for modern large language models.

Foundational Research

Key Contributions:

The multi-head self-attention mechanism, which allows the model to learn contextual relationships between tokens at different positions in parallel.

Positional encoding to retain information about the order of tokens without relying on recurrence.
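
The second contribution, positional encoding, can be illustrated with a minimal sketch of the sinusoidal scheme described in the Transformer paper. The function name and the toy sizes are assumptions made for this example, and the sketch assumes an even model dimension. Each position gets a fixed vector that is added to the token embedding, so order information survives even though all tokens are processed at once.

```python
import math

def sinusoidal_positional_encoding(num_positions, d_model):
    """Return a num_positions x d_model table of sinusoidal encodings.

    Even dimensions use sine and odd dimensions use cosine, with a
    frequency that decreases as the dimension index grows.
    """
    if d_model % 2 != 0:
        raise ValueError("d_model must be even in this sketch")
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            row.append(math.sin(angle))  # dimension i
            row.append(math.cos(angle))  # dimension i + 1
        table.append(row)
    return table

# Toy sizes chosen only for illustration.
pe = sinusoidal_positional_encoding(num_positions=4, d_model=8)
```

Because the table depends only on the position index, it can be precomputed once and reused for every input sequence.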

How It Improved on Previous Approaches

Parallelization: By eliminating recurrence, Transformers can process input sequences in parallel, making training significantly faster on GPUs or TPUs.

Long-Range Dependencies: Self-attention allows the model to capture dependencies between any pair of positions in the sequence, regardless of their distance.

Modularity: The encoder-decoder structure is flexible and can be easily extended to various tasks such as translation, text classification, summarization, and beyond.
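
The "any pair of positions" property above can be seen in a small sketch of single-head scaled dot-product attention. This is a simplified illustration, not the full architecture: the learned query, key, and value projections, multiple heads, and masking are all omitted, and the helper names and toy vectors are assumptions made for this example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # One score per key: every position is compared with every other.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        row = softmax(scores)
        weights.append(row)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(row, values))
            for j in range(len(values[0]))
        ])
    return outputs, weights

# Toy input: 3 tokens with 2-dimensional vectors. For brevity the same
# vectors serve as queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
```

Each row of `weights` is a probability distribution over all positions, so the first token can draw on the last one as directly as on its neighbor. The loop over queries has no dependency between iterations, which is what makes the computation easy to parallelize as a matrix product.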

2. Large-Scale Pre-Training

Brief Introduction

Large-scale pre-training involves training a model on vast amounts of unlabeled text data before fine-tuning it on a smaller, task-specific dataset. This approach took center stage in NLP thanks to models such as BERT and GPT. It laid the groundwork for language models that could learn rich linguistic and world knowledge representations, accelerating improvements across a variety of downstream tasks. (A downstream task is a later task that builds on the output of an earlier process, for example using a pre-trained language model for sentiment analysis.)

Foundational Research

GPT (Generative Pre-Training):

Improving Language Understanding by Generative Pre-Training (OpenAI, 2018)

Demonstrated that a transformer-based language model trained generatively on large unlabeled text corpora could adapt well to specific tasks with minimal changes.
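
Generative pre-training boils down to next-token prediction: every prefix of a text becomes a training example whose label is the token that follows. The sketch below shows that idea under simplifying assumptions. The token ids, the 10-token vocabulary, and the uniform "model output" are hypothetical stand-ins, not anything from the paper.

```python
import math

def next_token_pairs(token_ids):
    """Turn one token sequence into (context, next_token) training pairs."""
    return [(token_ids[:i], token_ids[i]) for i in range(1, len(token_ids))]

def cross_entropy(probabilities, target_id):
    """Negative log-probability assigned to the correct next token."""
    return -math.log(probabilities[target_id])

tokens = [5, 2, 7, 1]  # hypothetical token ids
pairs = next_token_pairs(tokens)

# Hypothetical model output: a uniform distribution over 10 tokens.
uniform = [0.1] * 10
loss = sum(cross_entropy(uniform, target) for _, target in pairs) / len(pairs)
```

No labels are needed beyond the text itself, which is why this objective scales to very large unlabeled corpora.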

BERT (Bidirectional Encoder Representations from Transformers):

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (Devlin et al., 2018)

Popularized a bidirectional transformer encoder fine-tuned for various NLP tasks, achieving state-of-the-art results on benchmarks like GLUE, MultiNLI and SQuAD.
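
BERT's bidirectional training relies on masked language modeling: some input tokens are hidden and the model predicts them from context on both sides. Here is a simplified sketch of how such training examples can be built. It always substitutes a `[MASK]` token, whereas BERT sometimes keeps the original token or swaps in a random one; the function name and the seed parameter are assumptions made for this example.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (masked_tokens, labels).

    labels holds the original token at masked positions and None elsewhere,
    so the loss is computed only where a token was hidden.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels

sentence = "the cat sat on the mat".split()
masked, labels = mask_tokens(sentence)
```

Unlike the left-to-right objective used by GPT, the prediction for a masked position can use tokens both before and after it.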

How It Improved on Previous Approaches

Transfer Learning: Pre-trained models provided robust features, improving performance on a range of downstream tasks with comparatively little labeled data.

Efficiency: Instead of training separate models from scratch for each new task, researchers could take one large pre-trained model and fine-tune it, saving time and resources.

Generalization: Large-scale pre-training exposed models to diverse linguistic contexts, enabling better understanding and generation capabilities across many tasks.

3. Scaling and Context Window Expansion

Brief Introduction

After BERT and GPT’s success, researchers realized that performance continued to improve as models were scaled in both size (number of parameters) and the length of context they could handle. GPT-2, GPT-3, and other large language models (LLMs) demonstrated dramatic improvements when trained on larger datasets and with more parameters, highlighting the importance of model scaling. In parallel, an increased context window allowed these models to handle longer pieces of text and more complex tasks.

Foundational Research

GPT-2 (2019):

Language Models are Unsupervised Multitask Learners (Radford et al., 2019)

Showed that significantly larger language models trained on vast amounts of internet data exhibit emergent properties such as coherent text generation, zero-shot task transfer, and more.

At about 1.5 billion parameters, it was more than 10x the size of its predecessor GPT and marked a key step in realizing the potential of scaled models.

GPT-3 (2020):

Language Models are Few-Shot Learners (Brown et al., 2020)

Solidified the few-shot learning paradigm, where GPT-3 could solve new NLP tasks with minimal examples or instructions in the prompt.

Contains about 175 billion parameters, more than 100 times as many as GPT-2's 1.5 billion.
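
Few-shot learning in this sense means placing a handful of worked examples directly in the prompt, with no weight updates. A minimal sketch of how such a prompt might be assembled is shown below. The `Input:`/`Output:` layout and the function name are assumptions made for this example, not a format prescribed by the paper.

```python
def build_few_shot_prompt(task_description, examples, query):
    """Assemble a prompt from a task description, solved examples, and a query."""
    lines = [task_description, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final entry is left open for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("sea otter", "loutre de mer")],
    "peppermint",
)
```

Passing an empty list of examples gives the zero-shot variant of the same prompt, and a single example gives the one-shot variant.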

Scaling Laws for Neural Language Models:

Scaling Laws for Neural Language Models (Kaplan et al., 2020)

Demonstrated empirical power-law relationships between model performance, number of parameters, dataset size, and amount of compute.

Showed that benefits from increasing scale follow predictable curves—guiding researchers on how to allocate compute versus data to maximize returns.
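
A power law of the form L(N) = (N_c / N)^alpha appears as a straight line on a log-log plot, which is what makes these curves easy to fit and extrapolate. The sketch below generates synthetic losses from such a law and recovers the exponent with a least-squares fit. The constants `N_C` and `ALPHA` are hypothetical values chosen for illustration, not the fitted values from the paper, and the data is synthetic.

```python
import math

def power_law_loss(n_params, n_c, alpha):
    """Loss predicted by a power law of the form L(N) = (N_c / N) ** alpha."""
    return (n_c / n_params) ** alpha

def fit_exponent(sizes, losses):
    """Least-squares slope of log(loss) against log(size), negated to give alpha."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(loss) for loss in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    return -covariance / variance

# Hypothetical constants, chosen only for illustration.
N_C, ALPHA = 1e14, 0.08
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [power_law_loss(n, N_C, ALPHA) for n in sizes]  # synthetic data
alpha_hat = fit_exponent(sizes, losses)
```

In practice the same kind of fit is run on measured losses from small training runs, and the resulting curve is used to estimate what a larger run would achieve before paying for it.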

How It Improved on Previous Approaches

Model Size and Data Scale: Larger models trained on bigger corpora demonstrated more sophisticated language understanding and generation (often referred to as “emergent abilities”).

Few-Shot and Zero-Shot Learning: GPT-3’s capacity for in-context learning showed that large models can adapt to novel tasks using only a handful of examples, reducing the need for extensive labeled data.

Context Window Expansion: By increasing the maximum sequence length, models handled more extensive context, enabling complex tasks like multi-turn dialogue, summarizing longer documents, and more nuanced reasoning.

Predictive Scaling: The scaling-laws framework gave researchers quantitative guidance—predicting how much additional compute or data would improve a model, thus making scaling efforts more efficient and cost-effective.

4. Instruction Fine-Tuning and RLHF

Brief Introduction

As LLMs grew in size and capability, it became apparent that aligning them with human intent and values was crucial to producing helpful and reliable outputs. Instruction fine-tuning involves training models to follow higher-level instructions (e.g., “explain your reasoning,” “answer politely”), while RLHF (Reinforcement Learning from Human Feedback) refines a model’s behavior by optimizing for responses that humans rate as more favorable and safer.

Foundational Research

InstructGPT:

Training Language Models to Follow Instructions with Human Feedback (OpenAI, 2022)

Showed that fine-tuning large language models with a preference model derived from human annotations greatly improves the helpfulness and safety of responses.

Fine-Tuning pipeline:

Supervised Fine-Tuning (SFT): The model is first trained on a dataset of human-written question–answer pairs or instruction–response examples, teaching it to map instructions to high-quality outputs in a supervised setting.

Reward Modeling (RM): Human annotators rank several model outputs for the same prompt; these rankings are used to train a separate reward model that can predict human preferences over new outputs.

RL Fine-Tuning: Using the reward model as a proxy for human judgment, policy optimization (e.g., PPO) adjusts the parameters of the supervised fine-tuned model to maximize expected reward, yielding a final model better aligned with human preferences.
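
Two pieces of the pipeline above lend themselves to a short sketch: the pairwise loss used to train the reward model from rankings, and the reward signal used during RL fine-tuning, which is typically reduced by a penalty for drifting away from the supervised model. The function names and the default `beta` are assumptions made for this example; the real training loop (PPO updates, batching, per-token bookkeeping) is omitted.

```python
import math

def pairwise_ranking_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_preferred - r_rejected)), computed stably.

    The loss is small when the reward model scores the human-preferred
    response higher than the rejected one.
    """
    diff = reward_preferred - reward_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

def kl_shaped_reward(reward, logprob_policy, logprob_reference, beta=0.1):
    """Reward minus a penalty for drifting from the reference (SFT) model.

    beta is a hypothetical penalty coefficient chosen for illustration.
    """
    return reward - beta * (logprob_policy - logprob_reference)

agree = pairwise_ranking_loss(2.0, 0.0)     # reward model agrees with humans
disagree = pairwise_ranking_loss(0.0, 2.0)  # reward model disagrees
shaped = kl_shaped_reward(1.0, logprob_policy=-0.5, logprob_reference=-1.0)
```

The penalty term discourages the policy from exploiting quirks of the reward model by producing text that the supervised model would consider very unlikely.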

How It Improved on Previous Approaches

Human-Centered Alignment: By integrating human feedback, models generated responses aligned more closely with user intent and societal values.

Better Quality Outputs: Instruction tuning helped reduce hallucinations, improve factual accuracy (to some extent), and enhance the clarity and relevance of generated text.

Safety and Ethics: Regular feedback loops allowed developers to better handle sensitive topics and mitigate toxic or harmful outputs, although ongoing work continues to address these challenges fully.

5. Evaluation Benchmark

Brief Introduction

As language models grew rapidly in capability, the methods for evaluating them also needed to evolve. Traditional benchmarks often focused on performance after task-specific fine-tuning. However, the emergence of models like GPT-2 and especially GPT-3 highlighted new abilities, such as zero-shot and few-shot task generalization through "in-context learning." Consequently, the evaluation methods expanded to include a wider array of benchmarks designed to test not just task-specific accuracy but also broader reasoning, knowledge recall, commonsense understanding, and the ability to adapt to novel tasks with minimal examples.

Foundational Research

The GPT-3 paper itself represents a foundational shift in evaluation methodology by systematically assessing performance across a diverse suite of existing benchmarks under zero-shot, one-shot, and few-shot conditions, without gradient updates or fine-tuning. Key benchmarks and evaluation paradigms emphasized during this period include:

Multi-Task Benchmarks (GLUE & SuperGLUE):

SuperGLUE: A Stickier Benchmark for General-Purpose Language Understanding Systems (Wang et al., 2019)

While originally designed for fine-tuning, models like GPT-3 were evaluated on these benchmarks in few-shot settings to measure generalization across tasks like natural language inference (NLI), coreference resolution, and sentence completion.

Question Answering (QA):

Datasets like TriviaQA (Joshi et al., 2017), WebQuestions (Berant et al., 2013), and Natural Questions (Kwiatkowski et al., 2019) became crucial.

A significant development was evaluating models in a "closed-book" setting (popularized by Roberts et al., 2020), testing the model's ability to recall knowledge stored in its parameters without access to external documents, contrasting with traditional "open-book" QA.
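
Closed-book evaluation can be sketched as a loop that asks the model each question with no supporting documents and scores the reply by normalized exact match. The normalization rules below follow a common convention (lowercasing, stripping punctuation and articles), and `toy_model` is a hypothetical stand-in for a real language model; the two questions are made up for illustration and do not come from any of the datasets named above.

```python
import re
import string

def normalize(text):
    """Lowercase, drop punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())

def exact_match(prediction, gold_answers):
    """True if the prediction matches any accepted answer after normalization."""
    return normalize(prediction) in {normalize(g) for g in gold_answers}

def evaluate_closed_book(model, dataset):
    """model is any callable mapping a question string to an answer string."""
    hits = sum(exact_match(model(question), golds) for question, golds in dataset)
    return hits / len(dataset)

# Hypothetical stand-in for a language model: a fixed lookup table.
def toy_model(question):
    return {"Who wrote Hamlet?": "William Shakespeare."}.get(question, "unknown")

dataset = [
    ("Who wrote Hamlet?", ["William Shakespeare", "Shakespeare"]),
    ("What is the capital of France?", ["Paris"]),
]
accuracy = evaluate_closed_book(toy_model, dataset)
```

An open-book variant would pass retrieved passages to the model alongside each question; the scoring code would stay the same.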

Commonsense Reasoning:

Benchmarks like the Winograd Schema Challenge (Levesque et al., 2012), HellaSwag (Zellers et al., 2019), PIQA (Bisk et al., 2019), and ARC (Clark et al., 2018) tested models' understanding of everyday situations and physical interactions.

Reading Comprehension:

Datasets like CoQA (Reddy et al., 2019) for conversational reading comprehension and DROP (Dua et al., 2019) for discrete reasoning (e.g., arithmetic) within text pushed models beyond simple span extraction.

How It Improved on Previous Approaches

Measuring Generalization and Adaptation: The shift towards zero/few-shot evaluation provided a more direct measure of a model's ability to generalize its pre-trained knowledge and adapt on-the-fly (in-context learning), moving beyond task-specific fine-tuning performance.

Broader Skill Assessment: Using a diverse suite of benchmarks (QA, reasoning, translation, arithmetic, etc.) provided a more holistic view of model capabilities and limitations compared to focusing on a single aggregate score like GLUE.

Highlighting Emergent Abilities: Evaluating large models on challenging tasks revealed emergent capabilities (like few-shot learning itself, or arithmetic) that were not apparent in smaller models, driving research into scaling effects.

Identifying Model Weaknesses: Rigorous evaluation across varied tasks (as done extensively in the GPT-3 paper) clearly identified areas where even large models struggled (e.g., certain types of reasoning, NLI tasks like ANLI), guiding future research directions.

GPT‑3.5 (Late 2022)

Key Development:

The launch of ChatGPT in late 2022, built on a model from the GPT-3.5 series, brought together the key research breakthroughs we've discussed. Its success wasn't about one new discovery, but rather about how these earlier advancements were combined, giving the public a powerful, early look at what artificial general intelligence might become.

Impact on the world:

Marked an inflection point in public awareness and adoption of AI.

It demonstrated the potential to automate or augment tasks in content creation, software development (code generation/debugging), customer service, education, general business productivity (summarization, drafting) and more.

Sparked widespread discussion on AI's potential to disrupt industries, automate work, and change human-computer interaction. It catalyzed a massive surge in investment and competitive activity across the AI landscape.

Increased focus on AI ethics and safety: ChatGPT's public availability highlighted critical issues around bias, misinformation, intellectual property, and potential societal impacts, accelerating discussions and research into AI governance and safety.

Conclusion

From the introduction of the Transformer architecture in 2017 to ChatGPT’s release in 2022, the field of large language models underwent a dramatic evolution through five main steps:

Transformer Architecture (2017): Replaced recurrent approaches with self-attention, enabling parallel processing and better long-range context handling.

Large-Scale Pre-Training: Demonstrated that models pre-trained on vast text corpora could learn powerful representations transferable to many downstream tasks.

Scaling and Context Window Expansion: Showed that performance and capabilities improve markedly with more parameters, more training data, and larger context windows.

Instruction Fine-Tuning and RLHF: Made model responses more helpful and better aligned with human intent through supervised instruction data and human preference feedback.

Evaluation Benchmark: Pushed models to evolve through rigorous testing frameworks that measure reasoning, factual knowledge, and real-world problem-solving skills.

In this article, we covered the timeline of progress from the introduction of the Transformer architecture in 2017 up to the release of ChatGPT in late 2022. The second part will cover developments from ChatGPT to the present, highlighting the advancements that enabled the next generation of language models. Expect deeper dives into emerging evaluation benchmarks, more efficient fine-tuning techniques, mixture-of-experts architectures, chain-of-thought reasoning, model distillation, and much more.
